Globality is the number-one B2B services marketplace that matches leading companies with small and midsize service firms worldwide for specialized high-value projects. Globality revolutionized the RFP process with AI technology. Millions of people use its platform and transact billions of dollars every year. Given the nature of Globality’s high-end enterprise customers, quality is a core value. The goal is to keep the number of bugs that slip to production at zero or one, hence the importance of QA. Globality can’t A/B test with actual users, so it needs to make sure the user experience is exceptional all the time.

GLOBALITY’S PROVIDER NETWORK COVERS SIX CONTINENTS OF THE WORLD’S GDP

In this article Globality will outline how to do the following:

- SET THE RIGHT GOALS FOR YOUR AUTOMATION TRANSFORMATION.

- PRIORITIZE YOUR AUTOMATION EFFORTS—WHAT TO AUTOMATE AND WHAT NOT TO.

- STREAMLINE QA AND DEV WORK WHEN CHANGE IS CONSTANT.

- USE ARTIFICIAL INTELLIGENCE (AI) TO TEST AN AI-BASED SYSTEM.

01 – Set the right goals for your automation transformation

It is important to set clear and achievable goals when identifying any business objective. According to the 2017–2018 World Quality Report, which surveyed 1,660 senior executives, the goals of automated testing included the following:

- Increase the quality of the product.

- Ensure end-user satisfaction.

- Implement quality checks early in the lifecycle.

- Contribute to business growth and outcomes.

- Detect software defects before go-live.

- Protect the corporate image and brand.

- Increase quality awareness across all disciplines.

- Reduce overall application cycle times by reducing waste.

Plan your goal and never lose sight of it. Automation is a means for achieving something. Analyze every step against that goal instead of boiling the ocean. Setting the right goals means establishing specific outcomes in certain time frames.

Globality set out to accomplish the following software quality goals in six months:

- Improve product quality by increasing test coverage by 50%.

- Implement quality checks early by adding automation into its sprints.

- Free up manual testers for more exploratory and usability testing.

- Set up cross-browser testing.

02 – Prioritize your automation efforts: what to automate and what not to automate

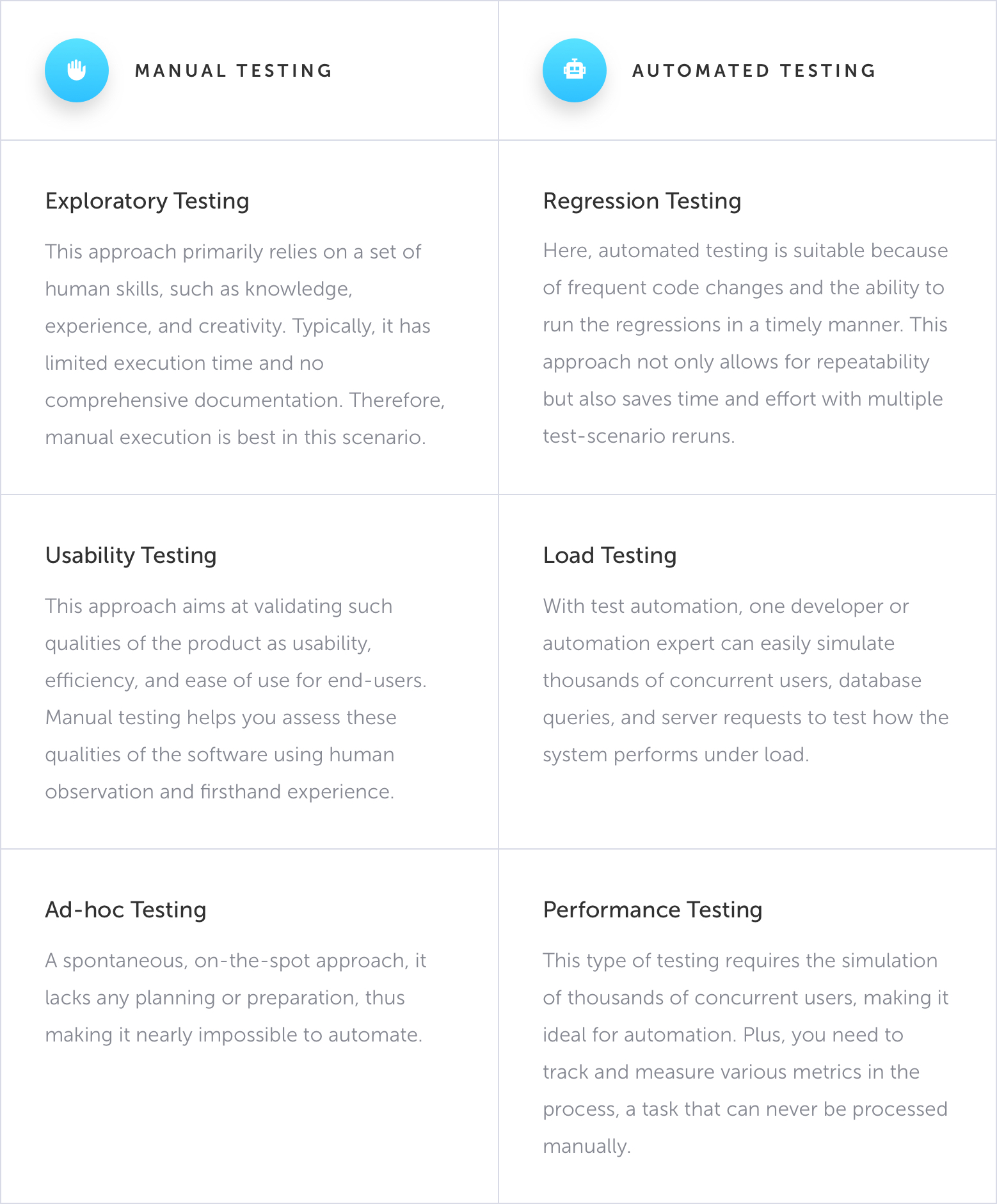

Find the right time to introduce automation into your journey; identify the right quality level and then prioritize your testing around it. Augment automation with manual testing. Identify the things you do most frequently, and make sure you have enough resources to automate those tasks. One hundred percent automation is unrealistic; assume there is going to be some manual testing. Not everything should be automated. Ask what drives revenue, which user flows matter most, and where the bottlenecks are.

You are better off testing some parts manually. While automation might be able to test those areas of the application, the effort of authoring and maintaining tests for those areas is too high. Take the time to create building blocks. If you do it right, then a big portion of your test authoring will involve plugging the right building blocks together. Tests built this way are easier to maintain, use, and extend.
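The building-block idea can be illustrated with a minimal Python sketch. It is not how Globality or Testim implement their tests; the step names (`log_in`, `create_project_brief`, `submit_brief`) and the shared context dictionary are hypothetical stand-ins for real UI actions. Each block is a small reusable step, and a test is just a list of steps plugged together.

```python
from typing import Callable, Dict, List

# A step is a small reusable action that reads and updates a shared context.
Step = Callable[[Dict[str, object]], None]


def log_in(ctx: Dict[str, object]) -> None:
    ctx["user"] = "demo-user"  # hypothetical stand-in for a real login
    ctx["log"].append("log_in")


def create_project_brief(ctx: Dict[str, object]) -> None:
    assert "user" in ctx, "must be logged in first"
    ctx["brief_id"] = 1  # hypothetical identifier
    ctx["log"].append("create_project_brief")


def submit_brief(ctx: Dict[str, object]) -> None:
    assert "brief_id" in ctx, "a brief must exist before it is submitted"
    ctx["submitted"] = True
    ctx["log"].append("submit_brief")


def run_flow(steps: List[Step]) -> Dict[str, object]:
    """Run the steps in order against a fresh context and return it."""
    ctx: Dict[str, object] = {"log": []}
    for step in steps:
        step(ctx)
    return ctx


# A test is assembled by plugging existing blocks together.
happy_path = [log_in, create_project_brief, submit_brief]
result = run_flow(happy_path)
print(result["log"])
```

When the login screen changes, only `log_in` needs updating, and every flow that reuses it picks up the fix.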

What is the best approach to help you decide?

- Risk-based approach – Identify user flows that are critical to your business or areas of the application that are more stable. For example, if 90% of your users use the same set of features, you might want to automate testing for that feature set because any issues would affect 90% of your user base. Another example is the checkout process, which is a revenue-generating flow.

- Conversation-led approach – Most context-driven software quality professionals are subject matter experts who know the systems they test inside and out. Having these team members consult with developers or testers to identify repetitive areas for automated testing can go a long way toward understanding where automation can add value and where it can’t.
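One way to make the risk-based approach concrete is to score each flow and automate from the top of the list down. The sketch below is an assumption for illustration only: the `Flow` fields, the revenue weight of 2.0, and the sample numbers are hypothetical and would need to come from your own usage data and team conversations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Flow:
    name: str
    user_share: float       # fraction of users who touch the flow, 0..1
    revenue_critical: bool  # does the flow generate revenue directly?
    stability: float        # 0 (changes constantly) .. 1 (rarely changes)


def automation_score(flow: Flow) -> float:
    """Higher score means a stronger candidate for automation."""
    revenue_weight = 2.0 if flow.revenue_critical else 1.0  # hypothetical weight
    return flow.user_share * revenue_weight * flow.stability


# Hypothetical sample data.
flows: List[Flow] = [
    Flow("core feature set", 0.90, False, 0.8),
    Flow("checkout", 0.50, True, 0.9),
    Flow("experimental dashboard", 0.05, False, 0.2),
]

ranked = sorted(flows, key=automation_score, reverse=True)
for flow in ranked:
    print(f"{flow.name}: {automation_score(flow):.2f}")
```

The exact formula matters less than agreeing on the inputs; the conversation-led approach is a good way to challenge and adjust the numbers.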

03 – Streamline QA and dev work when change is constant

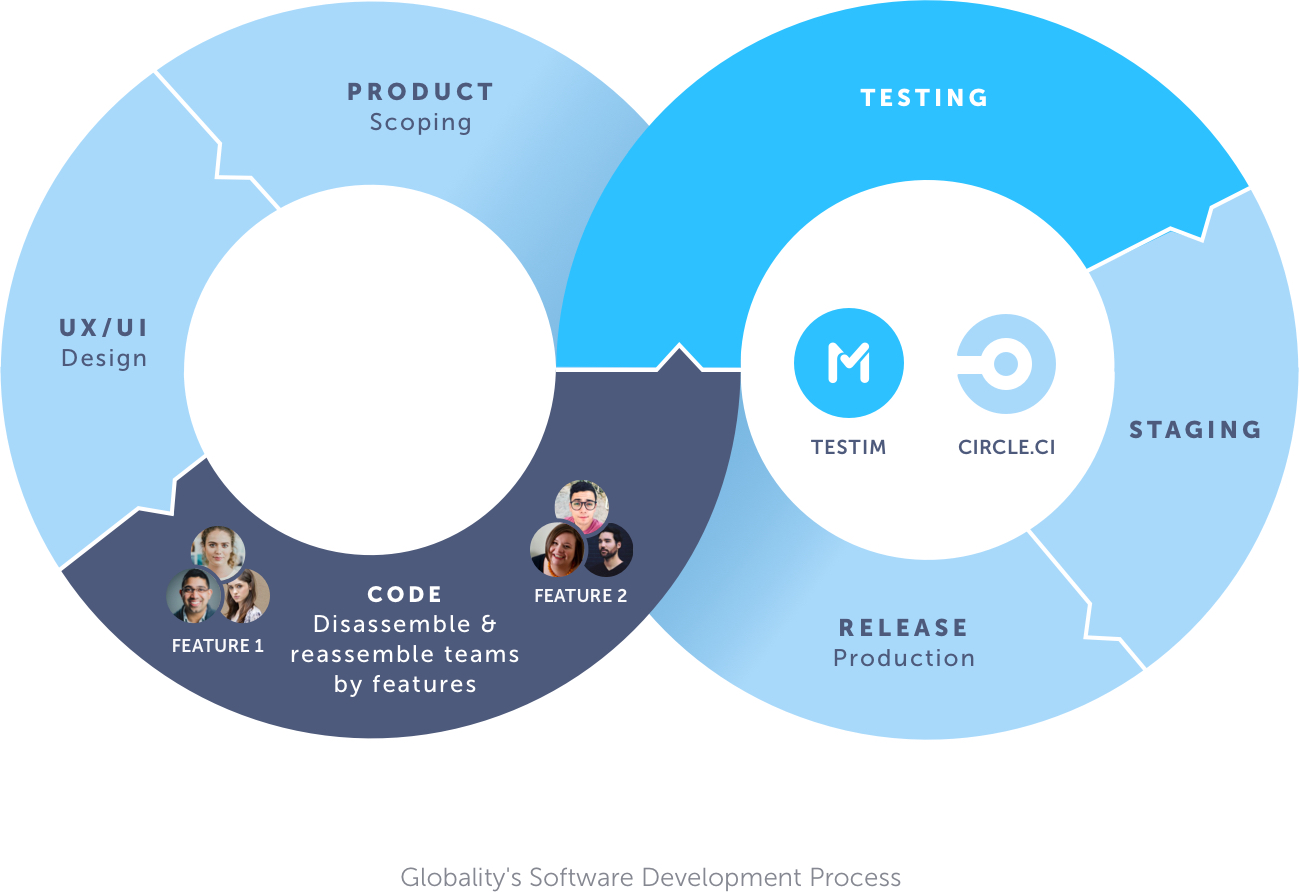

Globality has a unique approach to the structure of its R&D organization. It assembles and disassembles groups of engineers based on the tasks at hand. For example, when it needed to develop a payment system, it created a group that worked together for a few weeks. Once the task was completed, the team was disassembled.

QA is an integral part of the software development process. Groups are assembled based on specialized tasks and skill sets. QA engineers are an integral part of each group because of Globality’s emphasis on quality. Its head of QA is involved in the process at the design phase, thinking about the best way to test a new component of the application.

There are a number of environments: dev, testing, staging, beta, and production. Once a week, developers push their changes to testing, and QA begins. Some of the testing challenges Globality encountered as it set out to accomplish its goals included the following:

- Change as a muscle—product, groups, and users are constantly changing.

- How to balance progression with regression.

- Functionality is disconnected from the content.

- Content changes the interactive dialogue, making it difficult to create fixed tests.

Testim’s Impact on Globality’s Software Quality Initiatives:

Globality tried to automate its functional and end-to-end testing for two years using Selenium. “The amount of features we had plus the amount of maintenance made it too time-consuming to continue with Selenium,” said Zeevik Neuman, Head of QA for Globality.

The wow moment was when Testim.io took our most complex flow, the project brief, and automated that in a few days. We plugged that into our pipeline and quickly saw that maintenance was much more manageable.

RAN HARPAZ, CHIEF TECHNOLOGY OFFICER FOR GLOBALITY

Globality’s first objective was to automate the product’s entire happy path. It was able to complete this task using Testim.io in under a month. In just thirty days, it was able to increase test coverage by 30%. It then began testing different browsers and quickly started detecting bugs. As teams continued assembling and disassembling, Testim made it easy for developers and QA to ensure quality at all times.

Harpaz stated, “Now, I can say with confidence that I am testing every aspect of the system, and we’re releasing with confidence. Decisions are made on facts, not feelings.”

Automated testing has long had a reputation for not being as easy as “set it and forget it,” where you can author a test once and let it run on its own moving forward. Before AI, that reputation was deserved. Developers and testers used to spend up to 50% of their time regularly maintaining the automated testing scripts alongside the application source code; failing to do this led to false test results.

Now, with AI, testing is easier than ever before. Testim uses AI to speed up the authoring, execution, and maintenance of automated tests.

04 – Use AI to test an AI-based system

Testing AI systems is not easy. The challenge is managing the constant change because a learning system will change its behavior over time. How do you predict the outcomes? What’s correct today may be different from the outcome of tomorrow, which is also correct. A software quality professional will require the skills to interpret a system’s boundaries. There are always certain boundaries within which the output must fall. To make sure the system stays within these boundaries, developers and testers must look not only at output but also at input.
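A boundary check of this kind can be sketched in Python. Everything here is hypothetical: `match_score` is a toy stand-in for a learning system whose exact output varies from run to run, and the input ranges are assumptions. The point is that the test asserts on boundaries and on input validity, not on one exact expected value.

```python
import random


def match_score(project_budget: float, provider_rating: float, rng: random.Random) -> float:
    """Hypothetical stand-in for a learning system: output varies run to run."""
    base = 0.5 * min(project_budget / 100_000, 1.0) + 0.5 * (provider_rating / 5.0)
    noise = rng.uniform(-0.05, 0.05)  # simulates behavior drifting over time
    return max(0.0, min(1.0, base + noise))


def valid_input(project_budget: float, provider_rating: float) -> bool:
    """Input-side check: reject values the system was never meant to see."""
    return project_budget > 0 and 0.0 <= provider_rating <= 5.0


def check_boundaries(trials: int = 1000, seed: int = 0) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        budget = rng.uniform(1_000, 500_000)
        rating = rng.uniform(0.0, 5.0)
        assert valid_input(budget, rating)
        score = match_score(budget, rating, rng)
        # The exact score is unpredictable, but it must stay within bounds.
        assert 0.0 <= score <= 1.0
    # A clearly strong pairing should outscore a clearly weak one despite noise.
    strong = match_score(100_000, 5.0, rng)
    weak = match_score(1_000, 0.5, rng)
    assert strong > weak
    return True


print(check_boundaries())
```

Relative checks such as "strong outscores weak" tend to survive retraining better than assertions on exact values.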

When developing an AI solution, testing is a vital activity. Whoever performs this task must ensure that the functional and nonfunctional requirements are fulfilled. How is this different from testing traditional software? Because of the complexity of managing change, the software’s behavior is much harder to predict. An AI system also has many more input values that must be tested to verify the outcomes.

Some commonly asked questions when it comes to testing AI include the following:

- What are the acceptance criteria?

- How can we design test cases that test those criteria?

- Are there different data sets for training data and test data?

- Does the test data adequately represent the expected outcomes?

- Is the training data biased?

- What is the expected outcome of the model?
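Two of these questions, whether training and test data are separate and whether the training data is skewed, lend themselves to simple automated checks. The sketch below uses made-up sample data, and the 0.7 skew threshold is an arbitrary assumption; a skewed label distribution is only a rough signal of possible bias, not proof of it.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

Example = Tuple[str, str]  # (example id, label)


def overlap(train: List[Example], test: List[Example]) -> Set[Example]:
    """Return examples that appear in both sets, which would leak test data."""
    return set(train) & set(test)


def label_shares(examples: List[Example]) -> Dict[str, float]:
    """Return the fraction of examples carrying each label."""
    counts = Counter(label for _, label in examples)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


# Hypothetical sample data.
train = [
    ("brief-1", "legal"),
    ("brief-2", "legal"),
    ("brief-3", "legal"),
    ("brief-4", "marketing"),
]
test = [("brief-5", "legal"), ("brief-6", "marketing")]

leaked = overlap(train, test)
train_shares = label_shares(train)
skewed = max(train_shares.values()) > 0.7  # hypothetical threshold

print(leaked, train_shares, skewed)
```

Checks like these can run in the same pipeline as the functional tests, so a data problem surfaces before the model is retrained on it.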

Summary

01. Plan your goals

Never lose sight of them—automation is a means for achieving something.

02. Identify the right quality level

Then prioritize your testing around it.

03. 100% automation is unrealistic ROI

Assume there is going to be some manual testing.

- Automate the repetitive parts.

- Identify the user flows that are critical to your business.

- Focus on areas in your application that are stable.

04. Allocate time in every sprint to automate

Enforce that with a process.

05. Constantly measure quality and velocity

To ensure you’re achieving your goals.

06. Take the time to create building blocks

It’s easier to maintain, use, and extend coverage.