You can’t go anywhere today without hearing the term “artificial intelligence” (AI). It is in the vehicles we drive, the flight systems of the commercial aircraft we fly, and the commercial systems we interact with daily. It’s also in the vocabulary of most IT professionals these days. But precisely what is AI? In simplest terms, artificial intelligence is the development of computer systems and software that perform tasks that usually need human intelligence to complete. These tasks could include creating written content, making decisions, or identifying content on a page. You will often see the term “generative AI.” For the purposes of this discussion, this refers to the ability of systems and software to generate text, images, data, and other content.

There is a significant difference between AI and machine learning (ML), even though you often hear the two terms used together (AI/ML). While AI leverages software to make decisions that usually require human intelligence, ML is the ability of a system or software to acquire knowledge and, in doing so, improve the quality of the AI outcomes. Machine learning adapts to its environment through experiential learning.

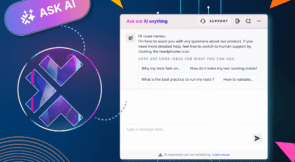

AI is now being promoted as the next big wave of improvement in the testing space. Companies have been augmenting traditional quality assurance tools with AI capabilities that improve test case creation, with a focus on built-in quality. Test Management for Jira (TTM) is one example of a testing solution that enables the test designer to leverage AI to rewrite and augment the test cases they write. Let’s look into AI for test creation and see if it is worth the hype.

Is AI a game changer?

Over the last several years, fundamental transformations have happened in the test case development and management space. Artificial intelligence and machine learning are poised to be the next generational change. Testing software vendors have been leveraging predictive analysis for the past decade to improve testing outcomes. Those improvements have focused primarily on identifying areas of high defect density in the software under test and on allowing tests to run across multiple platforms simultaneously.

With the emergence of AI in our society, vendors are finding new ways to leverage the technology to advance the testing disciplines. I believe most of us in IT agree that we won’t see AI replacing the need for testers anytime soon, but it could improve the ability of testers to expand test coverage and improve test effectiveness. I have never heard a company tell me their testing team is way too efficient, too fast at getting work done, and too thorough in finding defects. AI presents the possibility that testing teams can significantly improve in all three of these areas and keep the Agile team moving at the speed of the business. AI can help an average testing team become an excellent testing team, and help excellent testing teams expand their impact. In that sense, AI has the potential to be a game changer.

The positives and negatives of AI

With any technology, there are both positives and negatives. The goal is to have the positives outweigh the negatives so heavily that the negatives become irrelevant. AI is full of positives for the test professionals in your organization. Testers can use AI to rewrite their test cases and test suites to improve readability and completeness. Artificial intelligence can develop test cases from a list of scenarios. AI can enhance the readability of defect reports and improve the understanding of the developers who must resolve the issue. Testim uses AI to provide self-healing capabilities for test automation and therefore reduce maintenance costs. As the application changes, AI can identify the changes and update the scripts so that they don’t break. This reduces the amount of maintenance needed on scripts and improves the pass rates of automated tests. All of us have had the experience of running a test suite only to have half of the tests start failing due to changes to the application. Today’s test engineers can leverage AI to make their test suites more robust.
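
To make the self-healing idea concrete, here is a minimal sketch of one way a script could recover when an element's id changes. It is not Testim's actual algorithm, which the article does not describe. The element model, the `similarity` and `find_element` functions, the attribute names, and the 0.6 threshold are all assumptions chosen for illustration, and a simple attribute-overlap score stands in for whatever model a real tool would use.

```python
from typing import Optional

# Hypothetical element model: attribute name -> value, as captured from the page.
Element = dict[str, str]


def similarity(fingerprint: Element, candidate: Element) -> float:
    """Fraction of the recorded attributes that the candidate still matches."""
    if not fingerprint:
        return 0.0
    matches = sum(1 for key, value in fingerprint.items() if candidate.get(key) == value)
    return matches / len(fingerprint)


def find_element(page: list[Element], fingerprint: Element,
                 threshold: float = 0.6) -> Optional[Element]:
    """Try the recorded id first, then fall back to the closest attribute match."""
    recorded_id = fingerprint.get("id")
    if recorded_id is not None:
        for element in page:
            if element.get("id") == recorded_id:
                return element
    # The id no longer exists: pick the most similar element, if it is similar enough.
    best = max(page, key=lambda element: similarity(fingerprint, element), default=None)
    if best is not None and similarity(fingerprint, best) >= threshold:
        return best
    return None


# Attributes recorded when the test was first written (illustrative values).
recorded = {"id": "submit-btn", "tag": "button", "text": "Submit", "class": "primary"}

# The same page after a release renamed the button's id.
updated_page = [
    {"id": "cancel", "tag": "button", "text": "Cancel", "class": "secondary"},
    {"id": "btn-submit", "tag": "button", "text": "Submit", "class": "primary"},
]

healed = find_element(updated_page, recorded)
print(healed)
```

The threshold is the important design choice in a sketch like this. Set it too low and the script may "heal" onto the wrong element and pass for the wrong reason; set it too high and the test breaks on every cosmetic change.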

Artificial intelligence, however, has potential negatives as well. We have all read news articles about students using AI to write their term papers. While that particular issue isn’t a problem in test case authoring, over-dependence on AI may lead some testers to lose their analytical mindset and perspective. This degrades the ability of the company to depend on its employees to drive quality outcomes.

Another potential negative of AI is something I call “artificial bias.” This is the bias that is built into the intelligence model itself. If we use the example of modern politics, we can see that companies build tools to analyze the sentiment of social media posts and remove objectionable content. The problem is that the tools are biased toward one particular view. The same principle can apply to AI that is used to improve testing or development. If the system or software has been developed with a particular bias, entire pieces of the picture could be missing. In the early days of testing, we often talked to people about the difference between a developer who tested and a functional tester. A developer often just ensured that the code they wrote did everything it was supposed to do. A functional tester’s approach went beyond the positive and focused on ensuring the code didn’t do anything it wasn’t supposed to do.
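
The developer-versus-tester distinction can be shown with a small example. The sketch below uses a hypothetical `apply_discount` function, invented purely for illustration, with one positive test of the kind a developer typically writes and one negative test of the kind a functional tester adds. An AI assistant biased toward positive paths would tend to produce only the first.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_positive_path() -> None:
    """Developer-style check: the code does what it is supposed to do."""
    assert apply_discount(200.0, 25) == 150.0


def test_negative_paths() -> None:
    """Tester-style check: the code refuses what it is not supposed to do."""
    invalid_inputs = [(-1.0, 10), (100.0, -5), (100.0, 150)]
    for price, percent in invalid_inputs:
        try:
            apply_discount(price, percent)
        except ValueError:
            continue  # Rejected as expected.
        raise AssertionError(f"accepted invalid input: {price}, {percent}")


test_positive_path()
test_negative_paths()
```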

Bias isn’t bad or good. It is the implementation of that bias that determines its value. If your AI has a bias for quality, that is probably a good thing. It is probably bad if it has a bias for speed over quality. Just be aware that bias exists in artificial intelligence and be cautiously optimistic about its use.

What AI does well and not so well

AI does so many things well that it would be too much to discuss here. Instead, let’s focus on one area of caution with AI and testing that we should consider. You have heard the saying “garbage in, garbage out.” We used that saying with software to indicate that the quality of the outcome was directly tied to the quality of the input into the system. The same holds true for artificial intelligence. You can develop an AI engine that can augment test cases and improve test step language; however, it can’t overcome incomplete input. No AI system can currently anticipate what you don’t tell it. It can only interpret what you give it and generate great-looking outputs from that. Don’t be lulled into an artificial sense of completeness. Just because the test case you generated with AI has expansive steps and expected result descriptions, don’t assume the test is complete. If you fail to provide the right inputs to the AI engine, you will generate beautiful, bad results.
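
One practical guard against "garbage in, garbage out" is to check a scenario for gaps before handing it to any AI engine. The sketch below is only an illustration of that idea: the field names in `REQUIRED_FIELDS` and the `missing_inputs` helper are assumptions, not part of any particular tool, and your own checklist will differ.

```python
# Hypothetical checklist of inputs a scenario should carry before AI augmentation.
REQUIRED_FIELDS = ("preconditions", "steps", "expected_results", "negative_cases")


def missing_inputs(scenario: dict) -> list[str]:
    """Return the required fields that are absent or empty in the scenario."""
    return [field for field in REQUIRED_FIELDS if not scenario.get(field)]


# An illustrative scenario that looks reasonable but has gaps.
scenario = {
    "preconditions": [],
    "steps": ["Log in with a valid account"],
    "expected_results": ["The dashboard loads"],
}

gaps = missing_inputs(scenario)
if gaps:
    print("Fill these in before generating test cases:", ", ".join(gaps))
```

A check like this cannot judge whether the inputs are right, only whether they are present. It still needs a tester's review to decide what belongs in each field.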

Conclusion

Artificial intelligence is a powerful and positive capability for testing groups to leverage, not in place of a good test strategy, but to augment and improve test design and test resiliency. AI doesn’t improve testing on its own; it provides the potential for companies to vastly improve their testing capabilities. Tell us about your experience leveraging AI to improve testing outcomes. It would be great to hear your feedback and address any questions you might have.